Best Practices for Data Preprocessing in Machine Learning

Data preprocessing is like cleaning the dirt off of a gemstone, it may not glamorous part of the process, but is essential to revealing beauty of data

Data preprocessing is a crucial step in any data science project. It involves cleaning, transforming, and organizing raw data to make it more suitable for analysis. We will discuss the importance of data preprocessing in this article, as well as some commonly used preprocessing techniques.

Why is data preprocessing important?

There are often errors, inconsistencies, and missing values in raw data collected from various sources, making it difficult to analyze. Preprocessing helps in identifying and fixing these issues, improving the accuracy of the analysis. Preprocessing also makes the data more manageable by reducing its size, making it easier to work with.

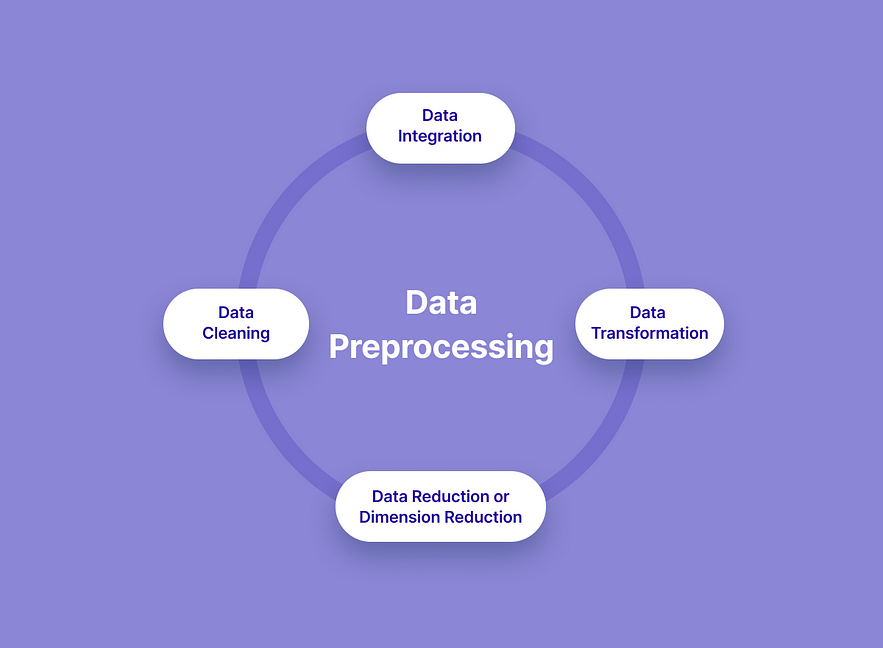

Common Techniques Used in Data Preprocessing:

Data Cleaning: This involves identifying and correcting errors, inconsistencies, and missing values in the data. In data cleaning, techniques such as data imputation, outlier detection, and normalization are commonly used.

Data Transformation: This involves transforming data into a format suitable for analysis. Feature extraction, scaling, and encoding are some of the techniques used in data transformation.

Data Integration: This involves combining data from different sources into a single dataset. Considering the fact that different formats and structures can be used to store data, this can be a challenging task.

Data Reduction: This involves reducing the size of the data while retaining its important features. When dealing with large datasets that are difficult to work with, this is especially important. Some common techniques used in data reduction are sampling and dimensionality reduction.

Challenges in Data Preprocessing:

Data Quality: The quality of data is crucial to the success of any data science project. Poor data quality can result in inaccurate analysis and faulty conclusions. Although data preprocessing can help identify and fix issues with data quality, it can also be resource- and time-consuming.

Data Volume: The volume of data generated by organizations is increasing rapidly, making it difficult to process and analyze. The preprocessing of data can reduce data size, but it can also lead to information loss if not done correctly.

Data Complexity: Data collected from different sources may have different structures, making it difficult to integrate and analyze. Preprocessing data can improve the quality of data by transforming it into a format that is more suitable for analysis, but it can also be challenging and require domain knowledge.

Conclusion

Data preprocessing is crucial in any data science project. It involves cleaning, transforming, and organizing raw data to make it more suitable for analysis. Common techniques used in preprocessing include data cleaning, transformation, integration, and reduction. While data preprocessing can help enhance the accuracy of analysis, it also comes with its challenges, such as data quality, volume, and complexity. As the volume and complexity of data continue to increase, the importance of data preprocessing in ensuring the success of data science projects cannot be overemphasized.